Love lessons: 3 tips and tricks for Databricks users

It’s that time of year again, love is in the air. Last year Databricks stole my heart, wowing me with its scalability, ease-of-use and integration with the Microsoft and Amazon cloud platforms. Considering these impressive features, it should come as no surprise to hear that I’m still very much in love! It would be my pleasure to once again ask Databricks to be my Valentine for 2021.

Last time, I talked about what first drew me to the technology. Now, after one year, I thought it would be appropriate to share some tips which have helped me stay firmly in love with Databricks.

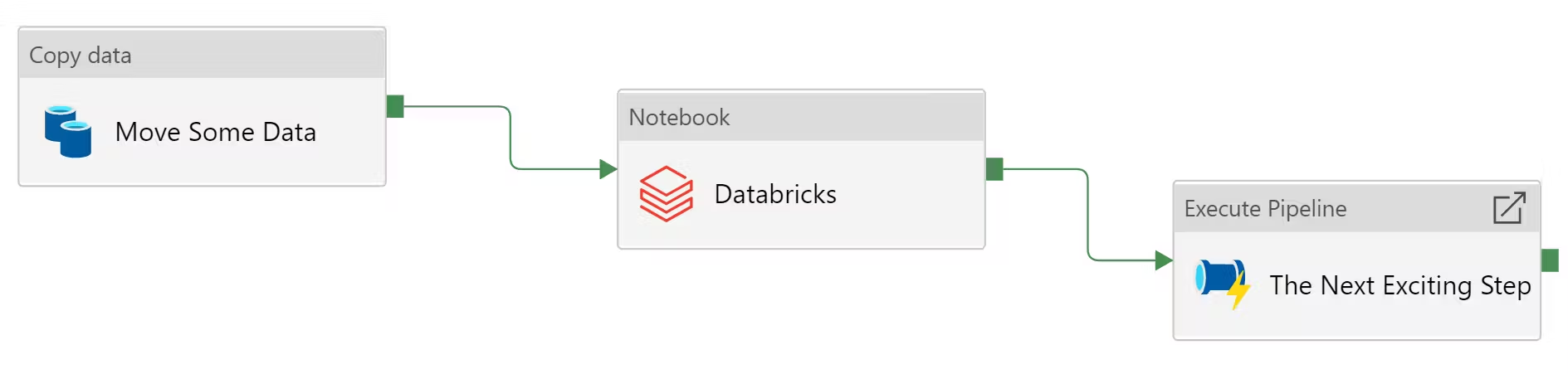

1. Schedule jobs with Azure Data Factory

To take my relationship with Databricks to the next level, I wanted to be sure that it would integrate seamlessly with my existing data pipelines.

I’ve been using Databricks in Azure, where Data Factory is the go-to data integration service. Fortunately, linking in Databricks jobs to data workflows here is an easy task. This link to Data Factory allows me to ensure my Databricks jobs always run at the appropriate time to complement the other moving parts in my Azure landscape.

2. Maintain a utility library

Any solution which allows you to write your own code gives you a lot of flexibility, but also power. And with great power, comes great responsibility.

If you want to use Databricks for your data engineering pipelines, it follows that you’d want to adhere to certain internal standards – like naming conventions, where to save your raw and enriched datasets, and more. One way which I find effective for managing this is to create your own organisational utilities library to install on your Databricks clusters (I’ve done this with Python, but the same logic holds for Scala). Having a library available to hold common functions, like reading and writing data, not only reduces code duplication; it also enforces consistency, allows you to implement validation rules, and can hide away code which could otherwise look messy.

3. Try Koalas

One of the biggest pain points I see with data scientists and engineers who are new to Databricks is getting familiar with the nuances of PySpark, which they may not have used before.

Most data scientists and engineers will be familiar with Pandas. Like its namesake bear, Pandas is very popular and easy to use (/cuddly) – but it isn’t scalable for big datasets. The performance and scalability of Spark is one of the major selling points of Databricks, but, unfortunately, PySpark just isn’t as warm and fuzzy as Pandas.

Enter Koalas. Another cuddly bear, but this one is more agile and runs on Spark. Koalas is a library specifically designed by Databricks to help those already familiar with Pandas take advantage of the performance Spark has to offer. The Koalas library provides Data Frames and Series that match the ones in Pandas, so any code which runs with Pandas can easily be changed to Koalas and be run with Spark.

* Nothing to do with the Koalas library. He's just cute!

When’s the wedding?

Currently, it seems Databricks is more popular than ever. More customers are asking me about it and I’m increasingly seeing it as a core element of any Azure data platform. The core principles of Databricks, the power of Spark, the flexibility of notebooks, and its position in the Azure and AWS landscapes still hold significant value. Plus, the love which has gone into building this platform is still very much apparent. Databricks is here to stay.

If you’d like to start your own Databricks love story, or are interested in ways which you can make the most of your existing clusters, feel free to drop me an email with any comments or questions at jlawton@aiimi.com, or connect with me on LinkedIn.

Stay in the know with updates, articles, and events from Aiimi.

Discover more from Aiimi - we’ll keep you updated with our latest thought leadership, product news, and research reports, direct to your inbox.

You may unsubscribe from these communications at any time. For information about our commitment to protecting your information, please review our Privacy Policy.